Agentic AI Is Coming for Your Content (Whether You’re Ready or Not)

Meta just acquired Manus, a Singapore-based AI agent startup, for an undisclosed sum. That’s notable. What’s more notable: Manus hit $100 million in annual recurring revenue in just eight months—reportedly the fastest any startup has reached that milestone.

The numbers tell the story:

- 147 trillion tokens processed in 2025

- 80 million virtual computers created

- $125 million+ revenue run rate

This isn’t a chatbot. It’s autonomous software that browses the web, completes tasks, and makes decisions without human supervision.

And it’s going to be crawling your site.

What Manus Actually Does

Manus is a general-purpose AI agent. Give it a task, such as “find me a two-bedroom apartment in Austin,” “build me a resume,” or “research competitor pricing,” and it opens a browser, navigates the web, reads content, and completes the work.

SiliconANGLE described a demo in which a user asked Manus to find a suitable apartment. In the publication’s words, the agent “immediately set about generating a comprehensive” search across real estate sites, filtering listings by the user’s criteria and presenting the options it found.

No step-by-step instructions. No hand-holding. The agent works out what to do on its own.

On the GAIA benchmark (a test of real-world AI problem-solving developed by Meta AI, Hugging Face, and the AutoGPT team), Manus claims state-of-the-art performance, exceeding OpenAI’s Deep Research. For context, in the original GAIA paper, GPT-4 with plugins scored about 15%, while human respondents averaged 92%.

Why Meta Bought It

Meta has spent billions on AI infrastructure without a clear return. Its Llama models, while capable, haven’t generated direct revenue. Manus changes that.

Here’s what Meta gets:

- Immediate revenue. $125M+ run rate from an existing subscription business.

- Agentic capability. The ability to deploy autonomous agents across 4 billion monthly users.

- Talent. The team that built the fastest-growing AI product of 2025.

Alexandr Wang, who runs Meta’s Superintelligence Labs (and founded Scale AI), announced the deal himself: “The Manus team in Singapore are world class at exploring the capability overhang of today’s models to scaffold powerful agents.”

Translation: They know how to make current AI models do more than anyone expected.

What This Means for Publishers

Here’s the uncomfortable part.

Those 147 trillion tokens? They came from somewhere. Manus has created 80 million virtual computers (cloud environments where the agent runs its own browser) to read web content and complete tasks. That is a large and fast-growing volume of web reading that happens entirely outside traditional search.

And unlike ChatGPT or Perplexity, which cite sources in their answers, autonomous agents often don’t surface where they got their information. They just use it.

If your content isn’t structured for AI agents to find, parse, and cite, you’re invisible to this entire category of traffic.

The shift is already happening:

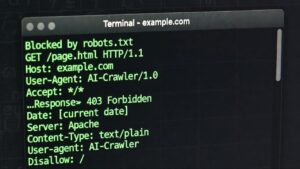

- 13.26% of AI bot requests now ignore robots.txt directives (up from 3.3% last year)

- Stealth crawlers like ChatGPT’s Atlas and Operator use Chrome user-agents, which makes them hard to tell apart from human visitors by user-agent string alone

- 80% of AI crawling is for training, 18% for search, and just 2% for user-triggered actions

That last number is about to climb. Agentic AI is “user-triggered actions” at scale.

The robots.txt Problem

robots.txt was designed for search engine crawlers that index and link. The deal was simple: you let us crawl, we send you traffic.

AI agents break this model. They:

- Crawl content to complete tasks (not to index or link)

- May not attribute where information came from

- Often use browser-based crawling that bypasses robots.txt entirely

Cloudflare data shows 5.6 million websites now block GPTBot and 5.8 million block ClaudeBot. But blocking doesn’t work when agents don’t identify themselves.
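robots.txt is advisory: a crawler honors it only if it downloads the file and checks the rules that match its own name. The minimal sketch below uses Python’s standard-library `urllib.robotparser` to show what that means in practice. The robots.txt content and URL are hypothetical. A file that blocks GPTBot and ClaudeBot by name gives a compliant crawler using those product tokens a clear “no,” but it says nothing about a request presenting an ordinary Chrome user-agent, because no rule names it and there is no catch-all `User-agent: *` group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks two self-identifying AI crawlers by name.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /
"""

# A generic desktop Chrome user-agent, like the one a browser-based agent may send.
CHROME_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def check_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Report whether the robots.txt rules permit each user-agent to fetch url."""
    parser = RobotFileParser()
    # Parse the rules from a string instead of fetching them over HTTP.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    url = "https://example.com/guides/hiking-boots-wide-feet"
    results = check_access(ROBOTS_TXT, url, ["GPTBot", "ClaudeBot", CHROME_UA])
    for agent, allowed in results.items():
        print("allowed" if allowed else "blocked", agent[:50])
```

GPTBot and ClaudeBot come back blocked; the Chrome string comes back allowed. Adding a `User-agent: *` group with `Disallow: /` would flip that last result, but it would also turn away every well-behaved crawler, search engines included, and it still only works on clients that choose to check robots.txt.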

The new question isn’t “should I allow AI crawlers?” It’s “how do I make sure AI agents can find and cite my content when they’re looking for answers?”

What Actually Works

1. llms.txt

The llms.txt specification is essentially a welcome note for AI systems. It tells agents what your site is about, what content is most important, and how to interpret your expertise.

Unlike robots.txt (which says what to avoid), llms.txt says what to prioritize. That’s the signal AI agents need.
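The llms.txt proposal (published at llmstxt.org) is a community convention, not a standard crawlers are obliged to honor. It describes a Markdown file served at `/llms.txt`: an H1 with the site or project name, a blockquote summary, optional free-text notes, then H2 sections containing link lists in the form `- [title](url): notes`. A section titled “Optional” marks links an AI system can skip when it needs a shorter context. This minimal Python sketch renders that layout from a small data structure; the site name, summary, and URLs are hypothetical placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    title: str
    url: str
    note: str = ""


@dataclass
class Site:
    name: str
    summary: str
    details: str = ""
    # H2 section title -> links listed under it; "Optional" marks skippable links.
    sections: dict[str, list[Link]] = field(default_factory=dict)


def render_llms_txt(site: Site) -> str:
    """Render an llms.txt body: H1 name, blockquote summary, notes, H2 link lists."""
    lines = [f"# {site.name}", "", f"> {site.summary}", ""]
    if site.details:
        lines += [site.details, ""]
    for heading, links in site.sections.items():
        lines += [f"## {heading}", ""]
        for link in links:
            item = f"- [{link.title}]({link.url})"
            if link.note:
                item += f": {link.note}"
            lines.append(item)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Hypothetical site used only for illustration.
    site = Site(
        name="Example Trail Co.",
        summary="Hands-on reviews of hiking footwear, focused on fit for wide feet.",
        sections={
            "Guides": [
                Link("Best hiking boots for wide feet",
                     "https://example.com/guides/wide-feet-boots",
                     "Ranked picks with the widths tested"),
            ],
            "About": [
                Link("Editorial policy", "https://example.com/about/editorial",
                     "How products are chosen and reviewed"),
            ],
            "Optional": [
                Link("Review archive", "https://example.com/archive"),
            ],
        },
    )
    print(render_llms_txt(site), end="")
```

The output is plain Markdown, so it can be served as a static text file at the site root (for this hypothetical site, `https://example.com/llms.txt`).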

2. Schema markup

AI agents parse structured data. Organization schema, Article schema, FAQ schema—these aren’t just for Google. They’re how autonomous systems understand what you do and whether to trust you.
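Schema.org markup is commonly embedded in a page as JSON-LD inside a `<script type="application/ld+json">` tag. The minimal Python sketch below emits Organization, Article, and FAQPage objects. The organization, article details, and URLs are hypothetical; the FAQ entry reuses a question and answer from this post. The property names used (`name`, `url`, `logo`, `sameAs`, `headline`, `author`, `publisher`, `datePublished`, `mainEntity`, `acceptedAnswer`, `text`) are schema.org vocabulary, but which properties a given consumer expects is worth checking against the schema.org type pages.

```python
import json


def json_ld_script(data: dict) -> str:
    """Wrap one schema.org object in a JSON-LD <script> tag for embedding in HTML."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical organization and article; replace with real details.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Trail Co.",
    "url": "https://example.com/",
    "logo": "https://example.com/logo.png",
    "sameAs": ["https://www.linkedin.com/company/example-trail-co"],
}

article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Best Hiking Boots for Wide Feet",
    "author": {"@type": "Person", "name": "Jane Doe"},
    "publisher": {"@type": "Organization", "name": "Example Trail Co."},
    "datePublished": "2025-06-01",
}

# FAQPage built from a question and answer that appear in this post's FAQ.
faq = {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    "mainEntity": [
        {
            "@type": "Question",
            "name": "What's the difference between AI chatbots and AI agents?",
            "acceptedAnswer": {
                "@type": "Answer",
                "text": "Chatbots respond to prompts. Agents complete tasks autonomously.",
            },
        }
    ],
}

if __name__ == "__main__":
    for obj in (organization, article, faq):
        print(json_ld_script(obj))
```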

3. Clear, citable content

AI agents looking for “best hiking boots for wide feet” will read your content the way a human would: scanning headings, looking for specific answers, checking if you seem authoritative.

Content that buries the answer in paragraph 17 won’t get cited. Content with clear structure, specific claims, and verifiable information will.
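One rough way to see a page the way a heading-scanning reader might is to pull out each heading and the first paragraph beneath it. This sketch does that with Python’s built-in `html.parser` and flags sections whose opening paragraph is missing or long. The 60-word threshold and the “answer first” rule are hypothetical heuristics chosen for illustration, not a documented criterion that any AI agent applies.

```python
from html.parser import HTMLParser


class OutlineParser(HTMLParser):
    """Collect each <h2>/<h3> heading and the first <p> that follows it."""

    def __init__(self):
        super().__init__()
        self.outline = []        # list of [heading text, first paragraph text]
        self._capturing = None   # tag whose text is being collected, if any
        self._buffer = []
        self._want_paragraph = False

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._capturing, self._buffer = tag, []
        elif tag == "p" and self._want_paragraph:
            self._capturing, self._buffer = "p", []

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag != self._capturing:
            return  # ignore inline tags such as <a> or <strong>, and later <p>s
        text = " ".join("".join(self._buffer).split())  # normalize whitespace
        if tag == "p":
            self.outline[-1][1] = text
            self._want_paragraph = False
        else:
            self.outline.append([text, ""])
            self._want_paragraph = True
        self._capturing = None


def answer_first_report(html, max_words=60):
    """Flag sections whose opening paragraph is missing or longer than max_words.

    max_words is an arbitrary illustrative threshold, not a known agent rule.
    """
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    report = []
    for heading, first_paragraph in parser.outline:
        words = len(first_paragraph.split())
        status = "OK" if 0 < words <= max_words else "REVIEW"
        report.append(f"{status}: {heading} ({words} words)")
    return report


if __name__ == "__main__":
    # Hypothetical page fragment.
    page = (
        "<h2>Which boots fit wide feet best?</h2>"
        "<p>Our top pick is Boot A, sold in wide and extra-wide widths.</p>"
        "<h2>Our testing story</h2>"
        "<p>" + "background " * 90 + "</p>"
    )
    print("\n".join(answer_first_report(page)))
```

The first section passes because its opening paragraph is a short, direct answer; the second is flagged because a reader scanning for an answer has to get through 90 words first.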

The Window Is Open

Meta will integrate Manus into its ecosystem, potentially reaching billions of users. OpenAI has Operator, now folded into ChatGPT agent. Anthropic has Claude’s computer use. Google has Project Mariner.

2026 is when agentic AI goes mainstream.

The publishers who optimize now, before everyone else catches on, will be the ones AI agents learn to trust. The llms.txt files being created today could become the training data and preference signals for autonomous systems tomorrow.

We’re at the same inflection point as early SEO. The question isn’t whether AI agents will read your content. It’s whether they’ll cite it.

FAQ

What’s the difference between AI chatbots and AI agents?

Chatbots respond to prompts. Agents complete tasks autonomously. When you ask ChatGPT a question, it generates an answer. When you give Manus a task like “research competitors,” it opens browsers, navigates websites, reads content, and delivers a finished report—without step-by-step instructions.

Will AI agents respect robots.txt?

Increasingly, no. Browser-based agents like Manus operate through virtual Chrome sessions, which makes them hard to distinguish from human visitors, and an agent acting on a user’s behalf may never check robots.txt at all. This is why signaling what to prioritize (via llms.txt) matters more than signaling what to block.

How do I know if AI agents are visiting my site?

You often won’t. Agents using browser automation show up as regular Chrome traffic in your analytics. Some AI crawlers identify themselves in their user-agent strings (PerplexityBot, GPTBot), but stealth crawlers don’t. The practical approach: optimize for visibility rather than trying to track every visit.
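If you have raw server logs, you can at least count the crawlers that do announce themselves. This Python sketch assumes the Apache/Nginx “combined” log format, where the user-agent is the last double-quoted field, and matches a short list of tokens taken from this post; the token list and sample log lines are illustrative assumptions, not a complete inventory. Everything else lands in an “unidentified” bucket, which is exactly where browser-based agents using a Chrome user-agent end up, alongside human visitors.

```python
import re
from collections import Counter

# Self-identifying AI crawler tokens named in this post. Illustrative only:
# vendors publish their own lists, and stealth agents will never match.
AI_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# In the "combined" log format the user-agent is the last double-quoted field.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_ai_requests(log_lines):
    """Tally requests per self-identified AI crawler; everything else is unidentified."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_FIELD.search(line)
        if not match:
            continue  # skip lines that don't look like combined-format entries
        user_agent = match.group(1).lower()
        for token in AI_TOKENS:
            if token.lower() in user_agent:
                counts[token] += 1
                break
        else:
            # Humans and browser-based agents with a Chrome user-agent both land here.
            counts["unidentified"] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample lines; in practice, pass an open access-log file instead.
    sample = [
        '203.0.113.5 - - [10/Jan/2026:10:00:00 +0000] "GET /guide HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
        '198.51.100.7 - - [10/Jan/2026:10:00:01 +0000] "GET /guide HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"',
    ]
    for name, n in count_ai_requests(sample).most_common():
        print(f"{n:8d}  {name}")
```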

Does this affect small sites or just big publishers?

Both, but differently. AI agents completing tasks like “find the best local plumber in Phoenix” or “compare pricing for project management tools” will read small business sites. The question is whether your content answers the query well enough to be cited—or whether a competitor’s does.

What should I do right now?

Three things: Add an llms.txt file that explains what your site does and what content matters most. Ensure your schema markup (Organization, Article, FAQ) is in place. Structure your content so answers are findable—clear headings, specific claims, expertise signals. The basics of AI visibility don’t change because agents are autonomous; they just become more urgent.

Malcolm Michaels is the founder of HeyTC and creator of GetCited. This post was developed with AI assistance—human direction, AI drafting, human editing.