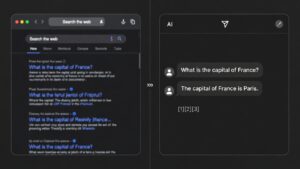

Which AI Crawlers Actually Matter in 2026

The AI crawler landscape shifted dramatically in 2025. Bytespider, which dominated at 42% of AI traffic, collapsed to 7%. GPTBot surged from 5% to 30%. And 13.26% of AI bots now ignore robots.txt entirely, up from 3.3% a year ago.

I maintain a database of 38 AI crawlers for GetCited. Here’s what actually matters based on the latest traffic data.

The Real Numbers

Cloudflare’s data shows AI bots (excluding Googlebot) now account for 4.2% of global HTML requests. Add Googlebot’s 4.5%, and nearly 9% of web traffic is crawler-related.

But the mix changed fast:

| Crawler | 2024 Share | 2025 Share | Change |

|---|---|---|---|

| GPTBot | 5% | 30% | +305% |

| Meta-ExternalAgent | n/a | 19% | New entry |

| Bytespider | 42% | 7% | -85% |

| ClaudeBot | 11.7% | 5.4% | -46% |

| Amazonbot | n/a | n/a | -35% |

ChatGPT-User requests grew 2,825%. PerplexityBot grew 157,490% in raw request volume. These aren’t typos.

The Uncomfortable Truth About Crawl-to-Referral Ratios

Here’s what nobody talks about: most AI crawling sends almost no traffic back.

Research shows Anthropic’s crawl-to-referral ratio as high as 500,000:1. They crawl 500,000 pages for every 1 visitor they send. OpenAI’s ratio: 3,700:1.

The breakdown of why AI crawlers hit your site:

- 80%: Training data collection

- 18%: AI search indexing

- 2%: User-triggered actions

If you’re allowing crawlers hoping for traffic, the math isn’t great for most of them. Perplexity is the exception: they actually send referral traffic because they cite sources visibly. How to get cited by Perplexity.

Which Crawlers to Allow

Based on current data, here’s how I’d prioritize:

For AI Search Citations (Allow These)

These crawlers are attached to products that cite sources and send traffic:

PerplexityBot: The clear winner for publishers. 157,490% growth and they show citations on every answer. Real referral traffic. Docs

OAI-SearchBot: ChatGPT’s search feature. Newer, growing. Docs

YouBot: You.com’s search. Smaller but cites sources. Docs

DuckAssistBot: DuckDuckGo’s AI features. Privacy-focused audience. Docs

xAI-Grok-Bot: Grok now cites sources, so there’s visibility upside. Docs

For User-Triggered Access (Allow These)

These crawlers fetch pages when a user asks the AI to look something up:

ChatGPT-User: Up 2,825%. When someone asks ChatGPT to browse a URL, this is what hits your server.

Perplexity-User: Real-time fetches for Perplexity queries.

Claude-User: Claude’s equivalent.

Training Crawlers (Your Call)

These collect data for model training. No direct citation benefit:

GPTBot: Dominates at 30% of AI traffic. Feeds ChatGPT training and features. Blocking it means invisibility to ChatGPT entirely, but the crawl-to-referral ratio is poor. Docs

ClaudeBot: Down 46% but still significant. Training + some real-time access. Docs

Google-Extended: Gemini and AI Overviews. Separate from Googlebot (search rankings unaffected). Docs

CCBot: Common Crawl’s nonprofit archive. Used by many AI companies for training data. Docs

Meta-ExternalAgent: Strong new entry at 19% share. Aggressive crawling for Meta AI.

anthropic-ai: Anthropic’s training crawler. 500,000:1 crawl-to-referral ratio.

Which Crawlers to Block

Bytespider (ByteDance): Collapsed from 42% to 7% market share, but still known for aggressive behavior. No documentation, no transparency. I block it.

Stealth crawlers: Here’s the problem. ChatGPT’s Atlas browser and Operator agent use standard Chrome user-agent strings. They’re indistinguishable from regular browser traffic. You can’t block them via robots.txt. There’s no solution for this yet. More on what agentic AI means for publishers.

The Blocking Landscape

For context on what other publishers are doing:

- 5.6 million websites now block GPTBot (up 70% since July 2025)

- 5.8 million websites block ClaudeBot

The trend is clear: publishers are increasingly blocking training crawlers while trying to preserve search/citation visibility.

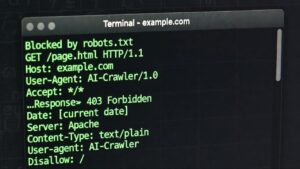

The Enforcement Problem

robots.txt is advisory. Bots can ignore it.

And increasingly, they do. 13.26% of AI bot requests ignored robots.txt directives in Q2 2025, up from 3.3% in Q4 2024. That’s a 4x increase in non-compliance in six months.

If a crawler ignores your robots.txt, your options are:

- Block by user-agent at the server level (.htaccess or nginx)

- Rate-limit suspicious traffic

- Accept that some crawling is uncontrollable

The stealth crawlers using Chrome user-agents? There’s currently no way to block those without blocking real users.

My Recommendations

If you want maximum AI visibility: Allow everything. Accept that most crawling is for training with poor referral returns, but you’ll be in the training data and searchable across all AI products.

If you want citations without training contribution:

# Allow search/citation crawlers

User-agent: PerplexityBot

Allow: /

User-agent: OAI-SearchBot

Allow: /

User-agent: ChatGPT-User

Allow: /

User-agent: xAI-Grok-Bot

Allow: /

# Block training crawlers

User-agent: GPTBot

Disallow: /

User-agent: anthropic-ai

Disallow: /

User-agent: Google-Extended

Disallow: /

User-agent: CCBot

Disallow: /If you want to block aggressively: Block all AI crawlers and accept invisibility to AI products. Join the 5.6 million sites blocking GPTBot.

Quick Reference

| Crawler | Share | Recommendation |

|---|---|---|

| GPTBot | 30% | Your call: dominates but poor referral ratio |

| PerplexityBot | Growing fast | Allow: best for citations |

| Meta-ExternalAgent | 19% | Block: aggressive, training only |

| Bytespider | 7% (down from 42%) | Block: opaque, aggressive |

| ClaudeBot | 5.4% | Your call: declining share |

| ChatGPT-User | +2,825% | Allow: user-triggered |

| OAI-SearchBot | Growing | Allow: ChatGPT search |

| xAI-Grok-Bot | n/a | Allow: now cites sources |

Full list with user-agent strings: AI Crawlers Reference

FAQ

Should I allow GPTBot?

Depends on your goals. It’s 30% of AI crawler traffic and feeds ChatGPT’s features. But the crawl-to-referral ratio is 3,700:1. You’re contributing training data, not getting much traffic back. I’d allow OAI-SearchBot and ChatGPT-User while blocking GPTBot if you want ChatGPT search visibility without training contribution.

Why did Bytespider collapse?

Unclear. ByteDance hasn’t commented. Possibly reduced crawling after backlash, possibly shifted to other methods. Either way, it’s no longer the dominant force it was.

Can I stop stealth crawlers?

Not currently. ChatGPT’s Atlas and Operator use standard Chrome user-agents. Blocking them means blocking real Chrome users. This is an unsolved problem.

Do AI companies respect robots.txt?

Less than they used to. 13.26% of requests now ignore robots.txt, up from 3.3% a year ago. The major companies (OpenAI, Anthropic, Google) publicly commit to respecting it, but enforcement is impossible.

Is blocking even worth it?

For training crawlers, maybe. You’re not getting traffic from them anyway. For search/citation crawlers, blocking hurts your visibility with no upside. The nuanced approach is blocking training while allowing search.

Malcolm Michaels is the founder of HeyTC and creator of GetCited. This post was developed with AI assistance: human direction, AI drafting, human editing.